Bot Management

The Webex Bot Builder platform provides developers with tools to test and manage their bots during and after development. The primary sections that provide these capabilities are Testing, Sessions, Curation, and History.

Testing, curation, sessions and history sections in Q&A bots for bot management.

Testing

As the scope of a bot grows over time, changes made to improve one area of the bot’s logic or NLU may negatively affect other areas of the same bot. The IMIbot platform provides a built-in, one-click bot testing framework that runs a large set of test cases quickly and easily.

The test cases can be configured by entering a message and an Expected Template in the respective columns. A test case may consist of a series of messages, which can be useful for testing flows.

Defining tests

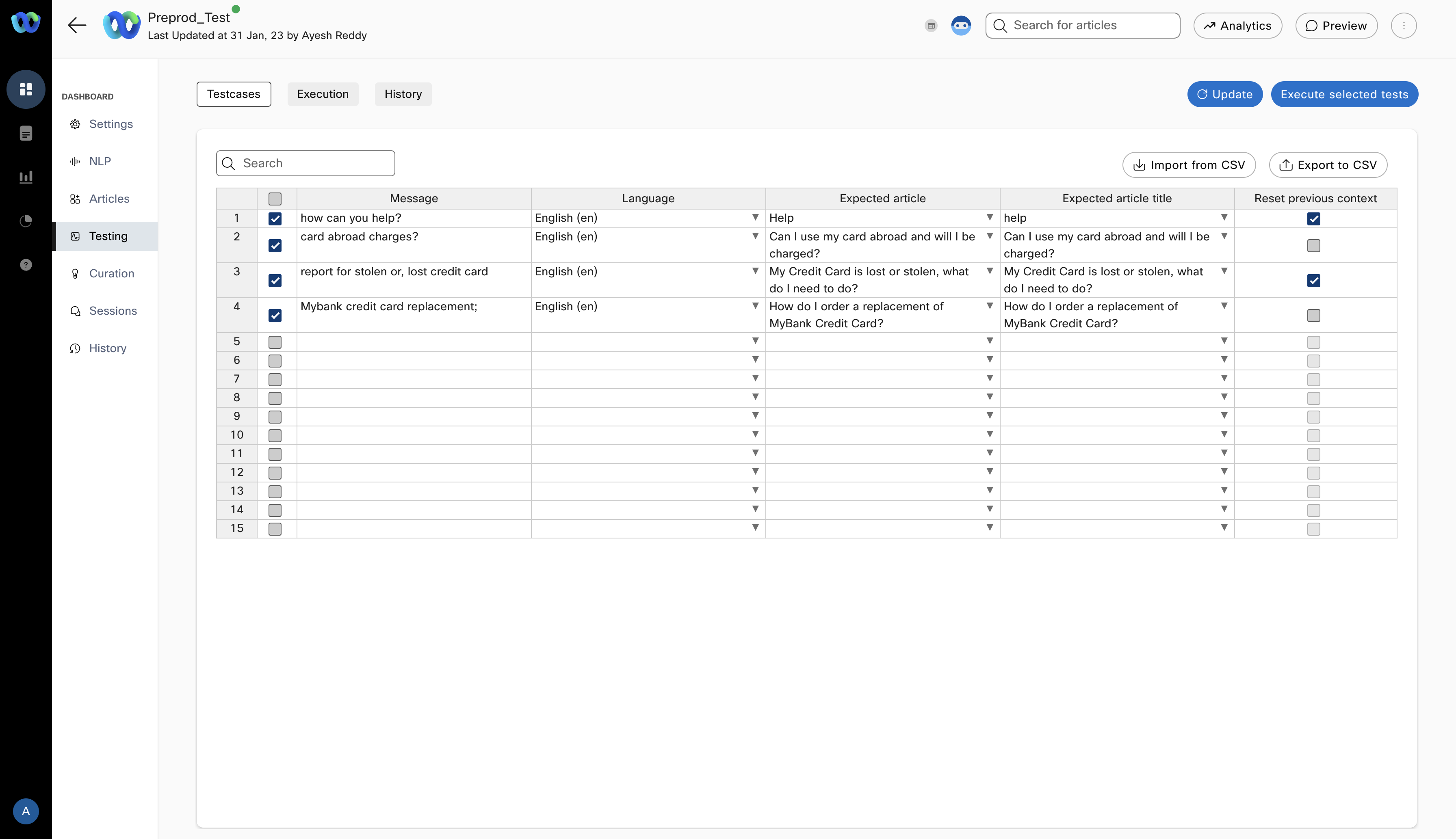

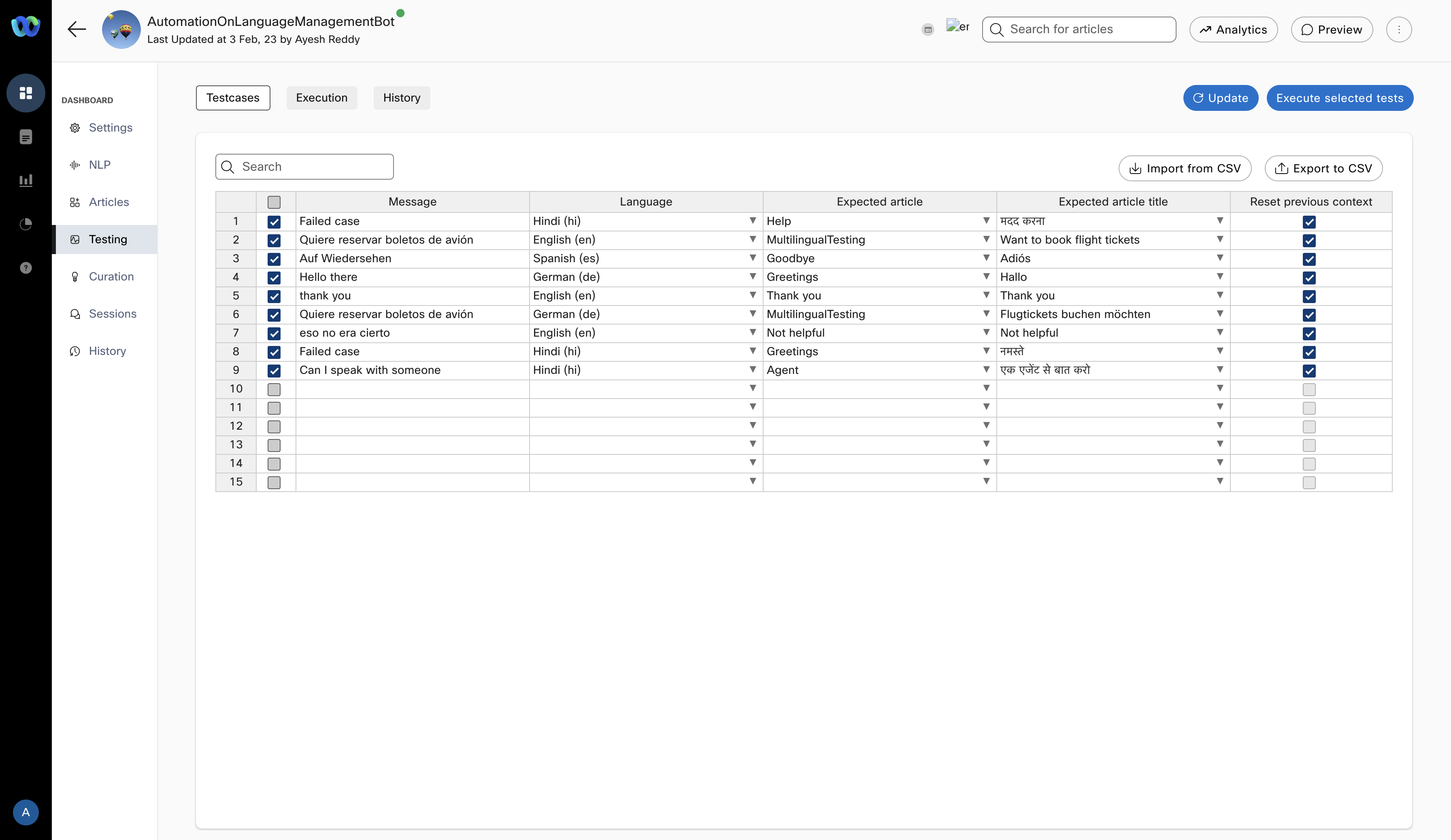

Tests can be defined in the Test cases tab on the Testing screen of a Q&A, task, and smart bot as shown below.

Defining and managing test cases in Q&A bots

Each row in the above table is an individual test case where you can define:

- Message: This is the sample user message and should contain the kind of messages you expect users to send to your bot.

- Expected article: The article whose response should be displayed for the corresponding user message. This column comes with a smart auto-complete feature to suggest matching articles based on the text typed to that point.

- Reset previous context: Whether the bot’s existing context should be cleared before the test case is executed. When this option is selected in the last column for a test case, that user message is simulated in a new bot session so that any pre-existing context (in the data store, etc.) has no effect on the result.
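The effect of the Reset previous context column can be pictured with a minimal Python sketch. It is not the platform's implementation: the `BotTestCase` class, its field names, and `group_into_sessions` are hypothetical names used only to show how a reset flag splits an ordered list of test cases into separate simulated sessions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BotTestCase:
    """One row of the test case table (field names are assumptions)."""
    message: str                 # sample user message
    expected_article: str        # article expected to answer the message
    reset_context: bool = False  # start a fresh session before this message


def group_into_sessions(cases: List[BotTestCase]) -> List[List[BotTestCase]]:
    """Split an ordered list of test cases into simulated sessions.

    A case with reset_context=True opens a new session; every other case
    continues the session of the case before it.
    """
    sessions: List[List[BotTestCase]] = []
    for case in cases:
        if case.reset_context or not sessions:
            sessions.append([])
        sessions[-1].append(case)
    return sessions


cases = [
    BotTestCase("Hi", "Welcome message", reset_context=True),
    BotTestCase("What are your opening hours?", "Opening hours"),
    BotTestCase("How do I reset my password?", "Password reset", reset_context=True),
]
sessions = group_into_sessions(cases)
print([len(s) for s in sessions])  # [2, 1]
```

In this sketch the first two messages share one session, so the second can depend on context set by the first, while the third starts clean.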

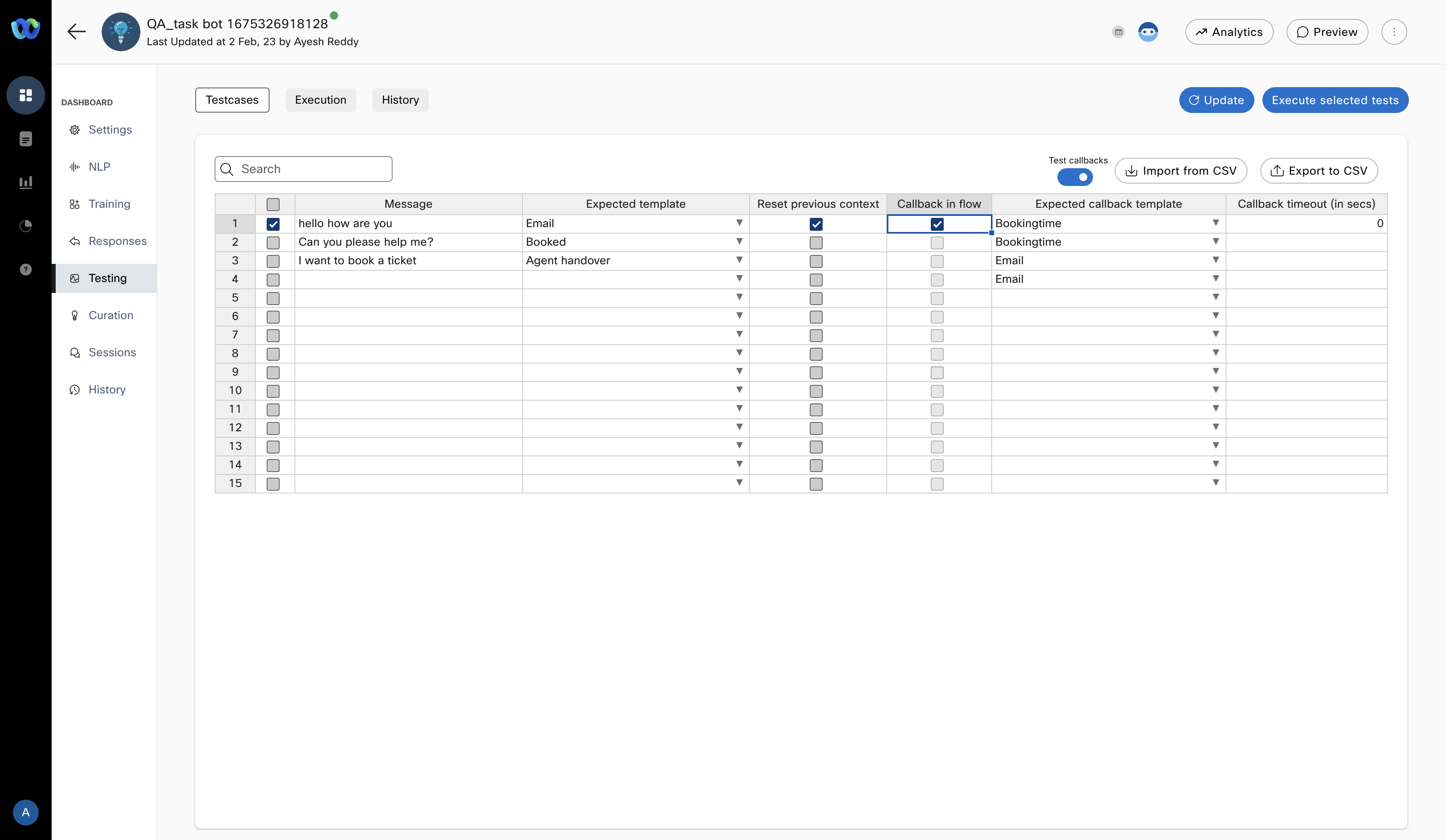

For task bots and smart bots, defining test cases is very similar to Q&A bots. The only difference is that, instead of selecting an article against a sample user message, you define a response template key.

For all bots, users can now run selected test cases.

Defining and managing test cases in Task bots

Advanced task bot flows that use our workflows feature can also be tested by switching on the “Test callbacks” toggle at the top right. Switching this toggle on adds two new columns to the table, as shown below.

Test cases in Task bot with callback testing enabled

For those intents that are expected to result in a callback path, you just need to check the “Callback in flow” box in that row and configure the template key that is expected to be triggered in the “Expected callback template” column as shown in the image above.

For all the above scenarios, in addition to manually defining the test cases, users can also import test cases from a CSV file. Please note that all existing cases will be overwritten whenever test cases are imported from CSV.
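Because an import overwrites every existing test case, it can help to generate the complete CSV file from one source of truth. The sketch below uses Python's standard `csv` module. The column names in `FIELDNAMES` are assumptions made for illustration and are not taken from the platform; check a file exported from the platform for the actual header.

```python
import csv
import io

# Hypothetical column names; the platform's real CSV header may differ.
FIELDNAMES = ["message", "expected_template", "reset_context"]


def build_test_case_csv(rows):
    """Serialize a list of test case dictionaries to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [
    {"message": "Book a flight", "expected_template": "book_flight", "reset_context": "true"},
    {"message": "To Paris, please", "expected_template": "ask_date", "reset_context": "false"},
]
csv_text = build_test_case_csv(rows)
print(csv_text)
```

The `csv` module quotes the second message automatically because it contains a comma, which is easy to get wrong when writing such files by hand.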

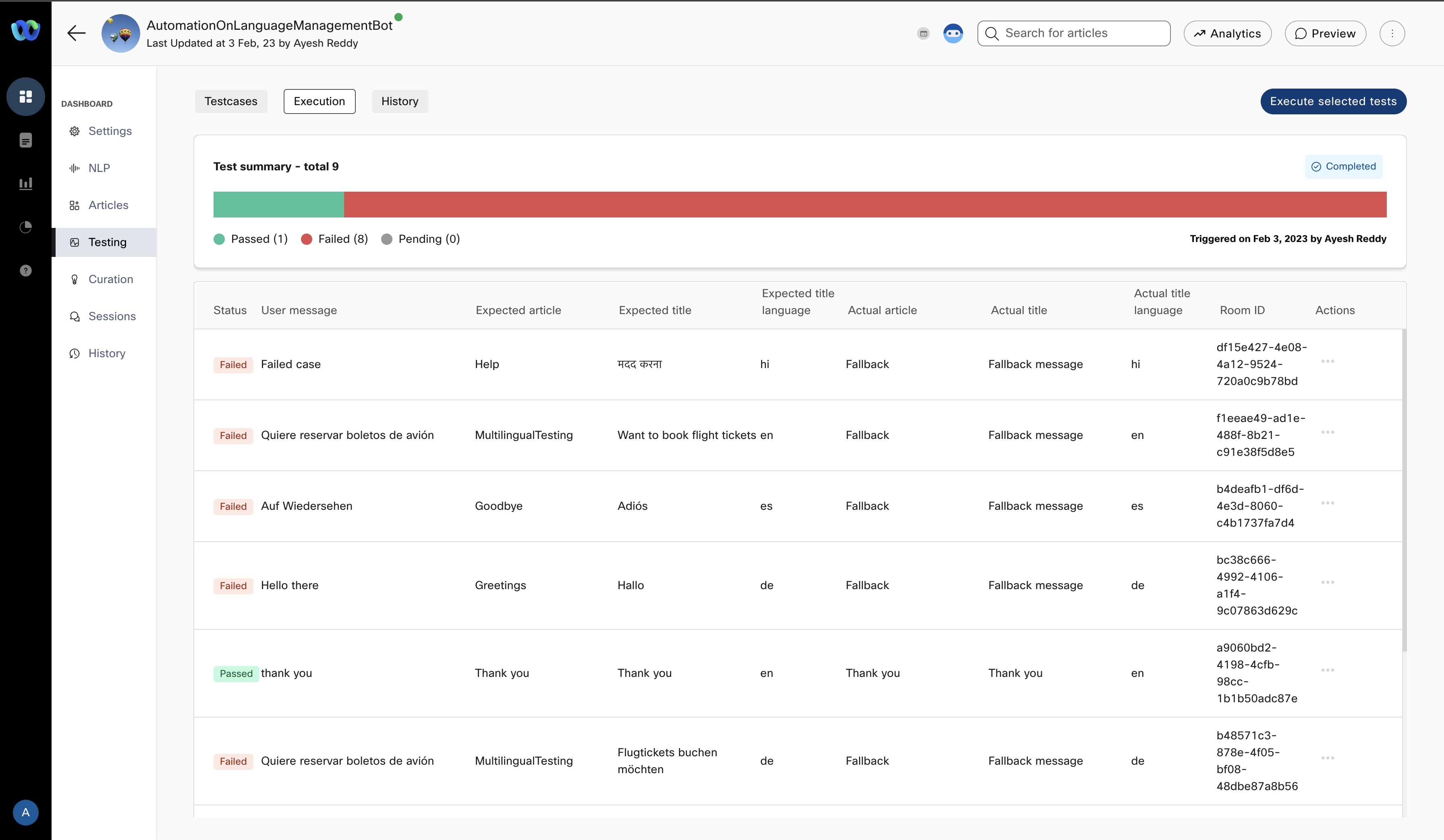

Executing tests

Tests defined on the “Test cases” tab can be executed from the “Execution” tab. By default, this screen shows the result of the last test execution, or is empty the first time it is loaded for a given bot. Clicking the “Execute tests” button runs all the test cases defined on the previous tab sequentially, and the result of each test case is shown next to it as soon as its execution completes.

A test run can be aborted midway if needed by clicking on the “Abort run” button on the top right.
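The run behaviour described above (sequential execution, results shown as each case finishes, and the option to abort midway) can be sketched as a Python generator. This is only an illustration of the control flow: `run_tests`, `get_response`, and `abort_requested` are hypothetical names, and the bot is replaced by a lookup table.

```python
from typing import Callable, Dict, Iterable, Iterator


def run_tests(
    cases: Iterable[Dict[str, str]],
    get_response: Callable[[str], str],
    abort_requested: Callable[[], bool] = lambda: False,
) -> Iterator[Dict[str, str]]:
    """Run cases one after another, yielding each result as soon as it is known."""
    for case in cases:
        if abort_requested():
            break  # stop before starting the next case
        actual = get_response(case["message"])
        status = "passed" if actual == case["expected"] else "failed"
        yield {"message": case["message"], "actual": actual, "status": status}


# Stand-in for the bot: a fixed lookup table instead of a real NLU call.
fake_bot = {"hi": "welcome", "bye": "goodbye"}
cases = [
    {"message": "hi", "expected": "welcome"},
    {"message": "bye", "expected": "farewell"},
]
results = list(run_tests(cases, lambda m: fake_bot.get(m, "fallback")))
print([r["status"] for r in results])  # ['passed', 'failed']
```

Using a generator means a caller can display each result immediately and simply stop iterating, or flip the abort flag, without waiting for the remaining cases.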

Test execution in Q&A bots

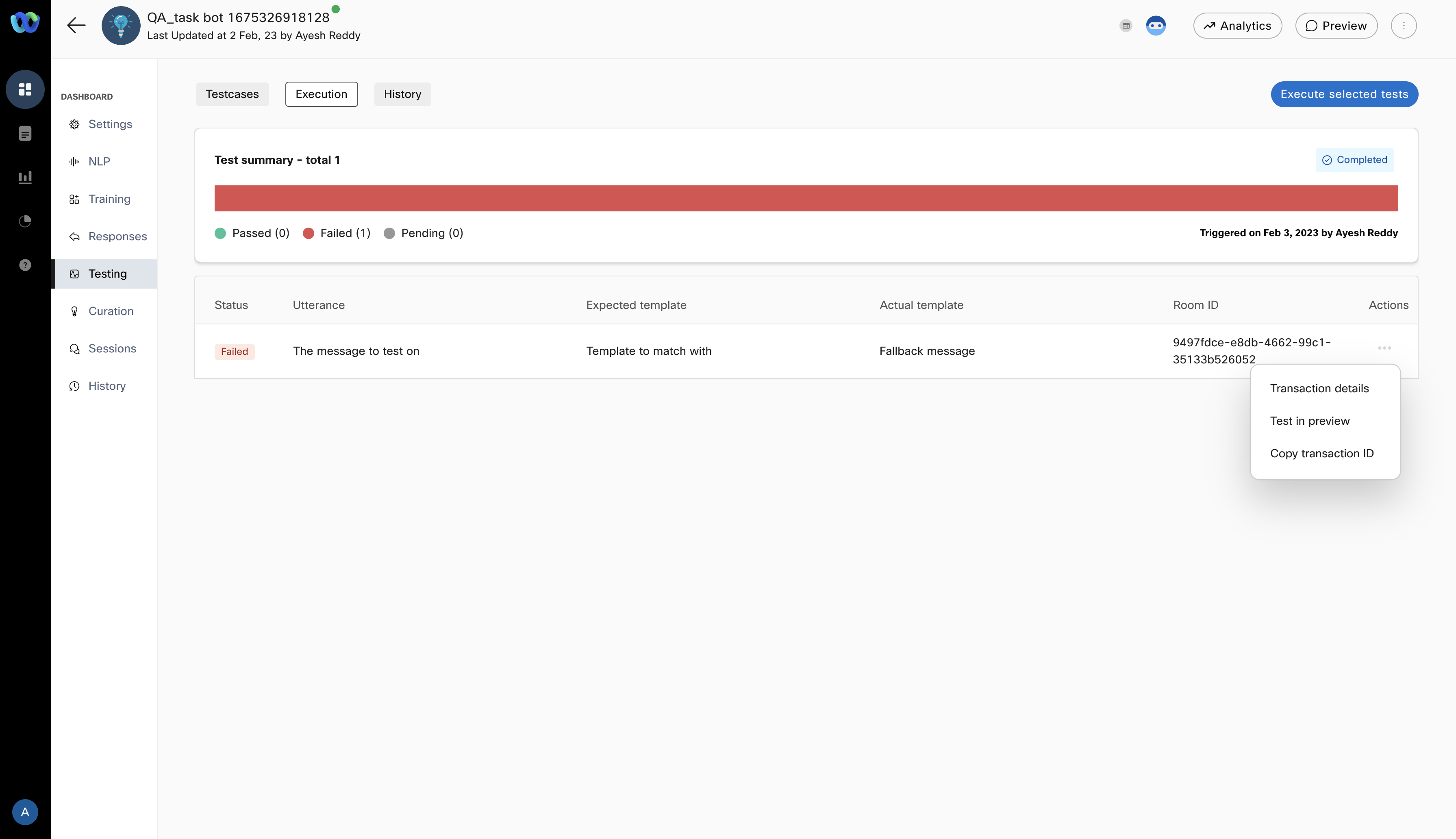

The session ID in which a test case is run is also displayed in the results for quick cross-reference, and all of its transactions can be viewed in a session view by selecting the Transaction details menu option in the Actions column.

Each test case can also be launched in preview for testing manually by selecting the Test in preview menu option in the Actions column.

Actions in test case execution

Execution with callbacks

The platform waits up to 20 seconds for a callback to be triggered. If no callback is triggered within that time, the test case is marked as failed.
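The timeout rule can be modelled with a `threading.Event` from the Python standard library. This is a sketch of the waiting logic only, under the assumption that a callback is signalled by setting an event; `wait_for_callback` is a hypothetical helper, and the check of the expected callback template is left out.

```python
import threading

CALLBACK_TIMEOUT_SECONDS = 20  # timeout stated in the documentation


def wait_for_callback(callback_received: threading.Event,
                      timeout: float = CALLBACK_TIMEOUT_SECONDS) -> str:
    """Return 'passed' if the callback fires within the timeout, else 'failed'."""
    return "passed" if callback_received.wait(timeout) else "failed"


# Simulate a callback that arrives after 0.05 s. Short timeouts are used here
# so that the example finishes quickly.
event = threading.Event()
timer = threading.Timer(0.05, event.set)
timer.start()
on_time = wait_for_callback(event, timeout=2)
timer.join()

# An event that is never set stands in for a callback that never arrives.
never = wait_for_callback(threading.Event(), timeout=0.1)
print(on_time, never)  # passed failed
```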

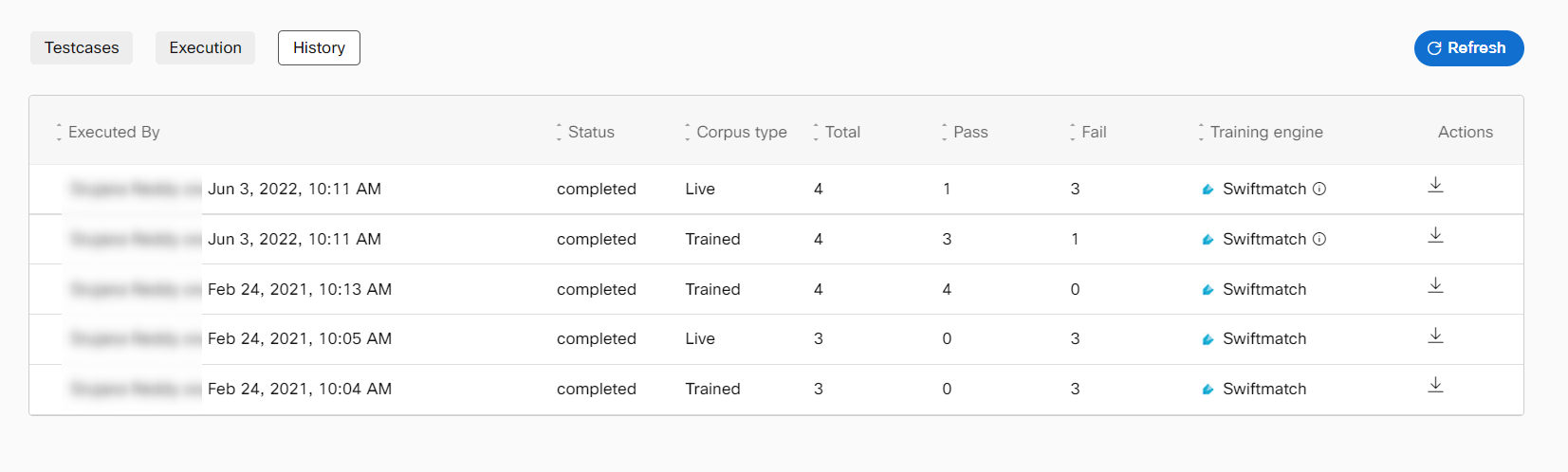

Execution history

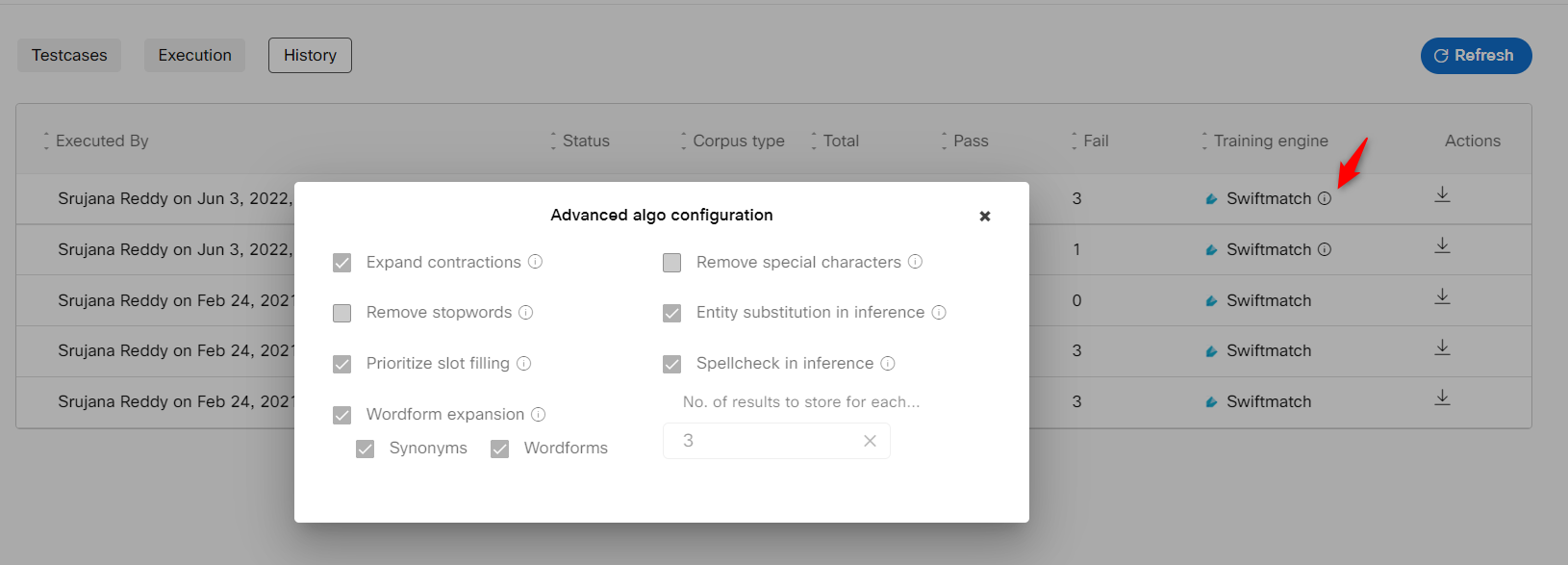

All test case executions can be viewed on demand in the “History” tab. The data for each execution can be downloaded as a CSV file for offline use, for example to attach as proof of success. For each execution instance, bot developers can view the engine and algorithm configuration settings that were used when the test cases were executed. This allows developers to choose the optimal engine and configuration for the bot.

History of test executions

Click on the Info icon corresponding to the training engine with which the corpus was trained to view the Advanced algorithm configuration settings that were configured at the time of test case execution.

Sessions

This section displays the history of sessions established with consumers. Each session is displayed as a record that contains all the messages of the session. The messages of a single session or of all sessions can be downloaded to a CSV file. This information is useful for auditing, analyzing, and improving the bot.

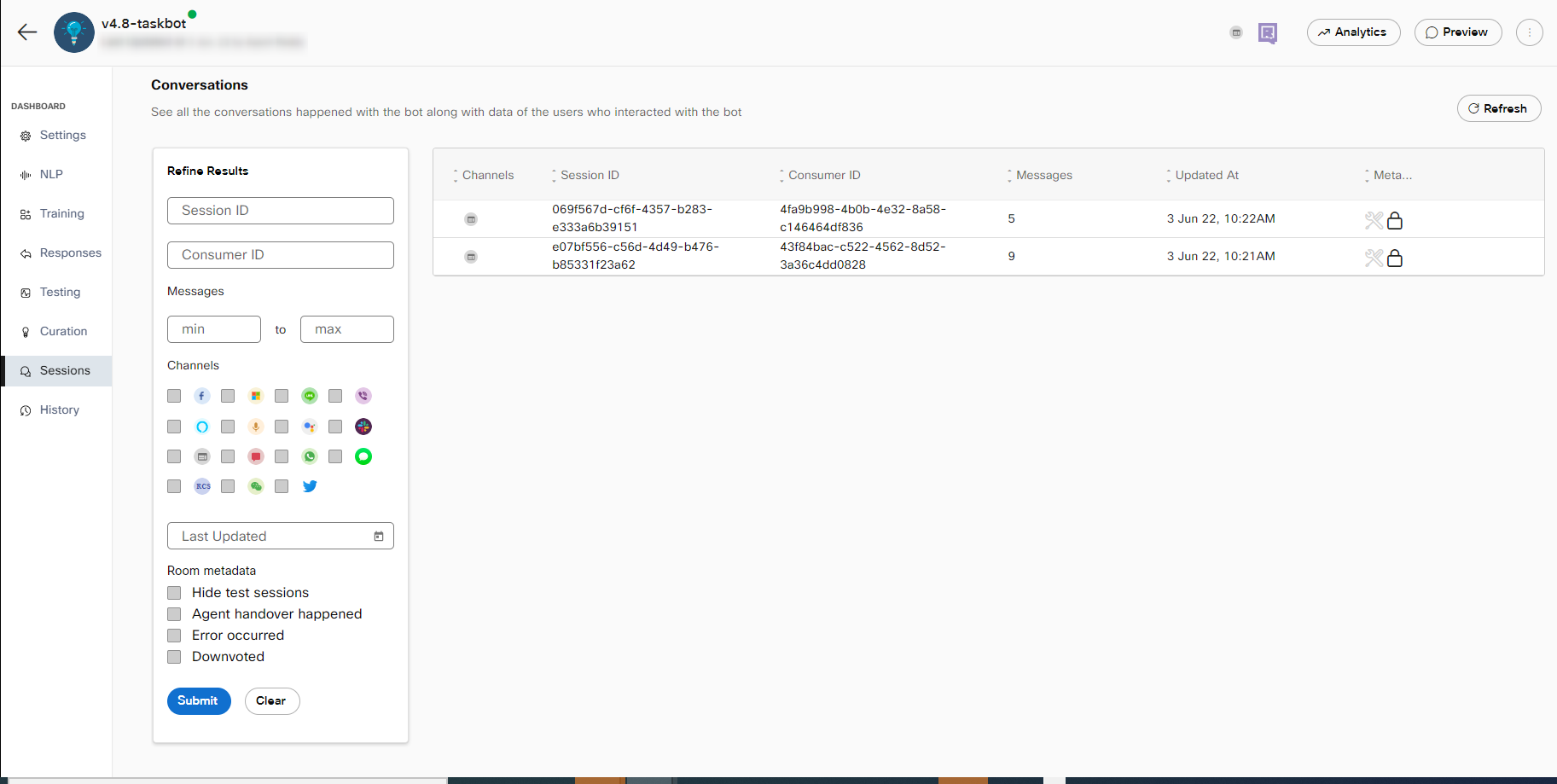

Sessions in a Q&A bot

The sessions table shows a list of all the sessions/rooms created for that bot. The table gets paginated if there are more rows than can be accommodated in one screen. Any of the fields in the table can be sorted or filtered using the 'refine results' section on the left-hand side. The fields which are present represent the following information about any particular session:

- Channels: Channel where the interaction took place

- Session ID: The unique room id or session id for a conversation

- Consumer Id: The consumer id of the consumer who interacted with the bot

- Messages: Number of messages exchanged between the bot and the user.

- Updated At: Time of the room closure

- Metadata: Contains additional info about the room.

- Hide test sessions: Select this check box to hide the test sessions and display only the list of live sessions.

- Agent handover happened: Select this check box to filter the sessions that are handed over to an agent. If agent handover happens, it displays the headphone icon indicating the handover of the chat to a human agent.

- Error occurred: Select this check box to filter the sessions in which error occurred.

- Downvoted: Select this check box to filter the downvoted sessions.
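The check boxes above behave like filters over the session list. The following Python sketch shows one way to express that, assuming that the selected check boxes combine with AND. The record keys (`is_test`, `agent_handover`, `error_occurred`, `downvoted`) are hypothetical and do not reflect the platform's data model.

```python
from typing import Dict, List


def filter_sessions(sessions: List[Dict], hide_test: bool = False,
                    agent_handover: bool = False, error_occurred: bool = False,
                    downvoted: bool = False) -> List[Dict]:
    """Keep the sessions that satisfy every selected check box."""
    kept = []
    for s in sessions:
        if hide_test and s["is_test"]:
            continue  # drop test sessions, keep live ones
        if agent_handover and not s["agent_handover"]:
            continue
        if error_occurred and not s["error_occurred"]:
            continue
        if downvoted and not s["downvoted"]:
            continue
        kept.append(s)
    return kept


sessions = [
    {"session_id": "s1", "is_test": True, "agent_handover": False,
     "error_occurred": False, "downvoted": False},
    {"session_id": "s2", "is_test": False, "agent_handover": True,
     "error_occurred": False, "downvoted": True},
    {"session_id": "s3", "is_test": False, "agent_handover": False,
     "error_occurred": True, "downvoted": False},
]
live = filter_sessions(sessions, hide_test=True)
escalated = filter_sessions(sessions, agent_handover=True, downvoted=True)
print([s["session_id"] for s in live])       # ['s2', 's3']
print([s["session_id"] for s in escalated])  # ['s2']
```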

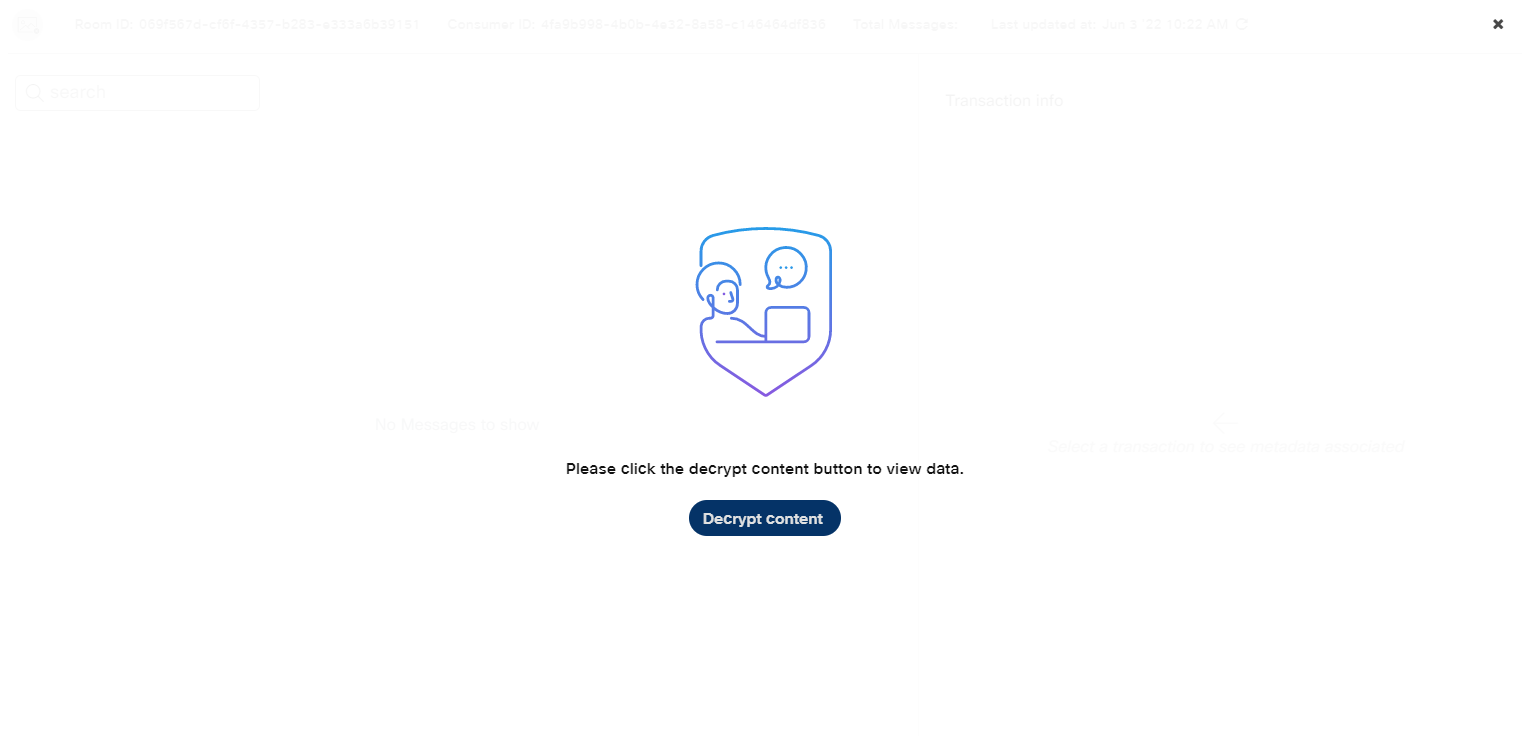

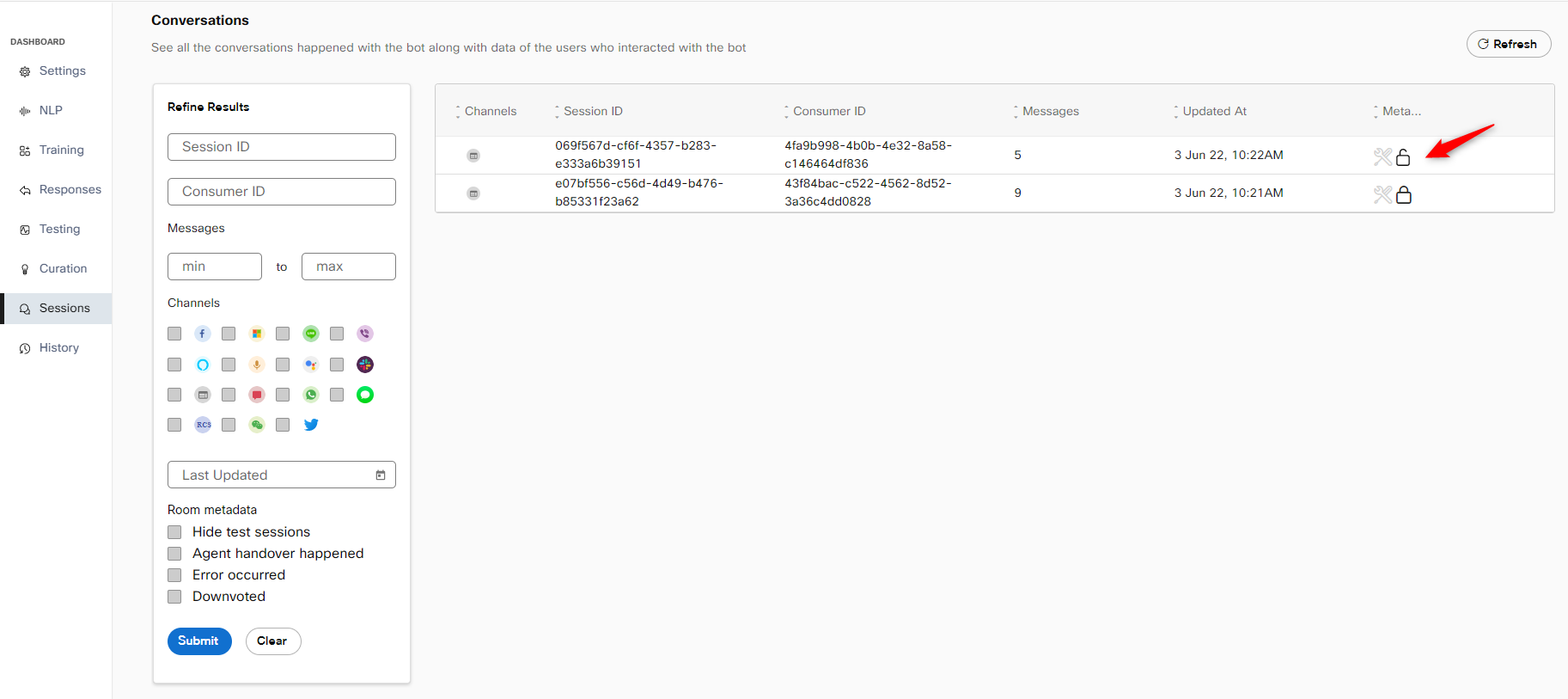

Click a row in the sessions table to open the detail view for that session and unlock the session details. A lock icon is also displayed against the session. The permission to decrypt sessions is granted at the user level. If the decrypt access toggle is enabled, the user can access any session using the Decrypt content button. However, this functionality applies only when Advanced data protection is set to true (enabled) in the backend.

Unlock icon indicates the session is in the decrypted state

Click the Decrypt content button to view the session details.

Session details on clicking a particular session (session data is visible after decryption)

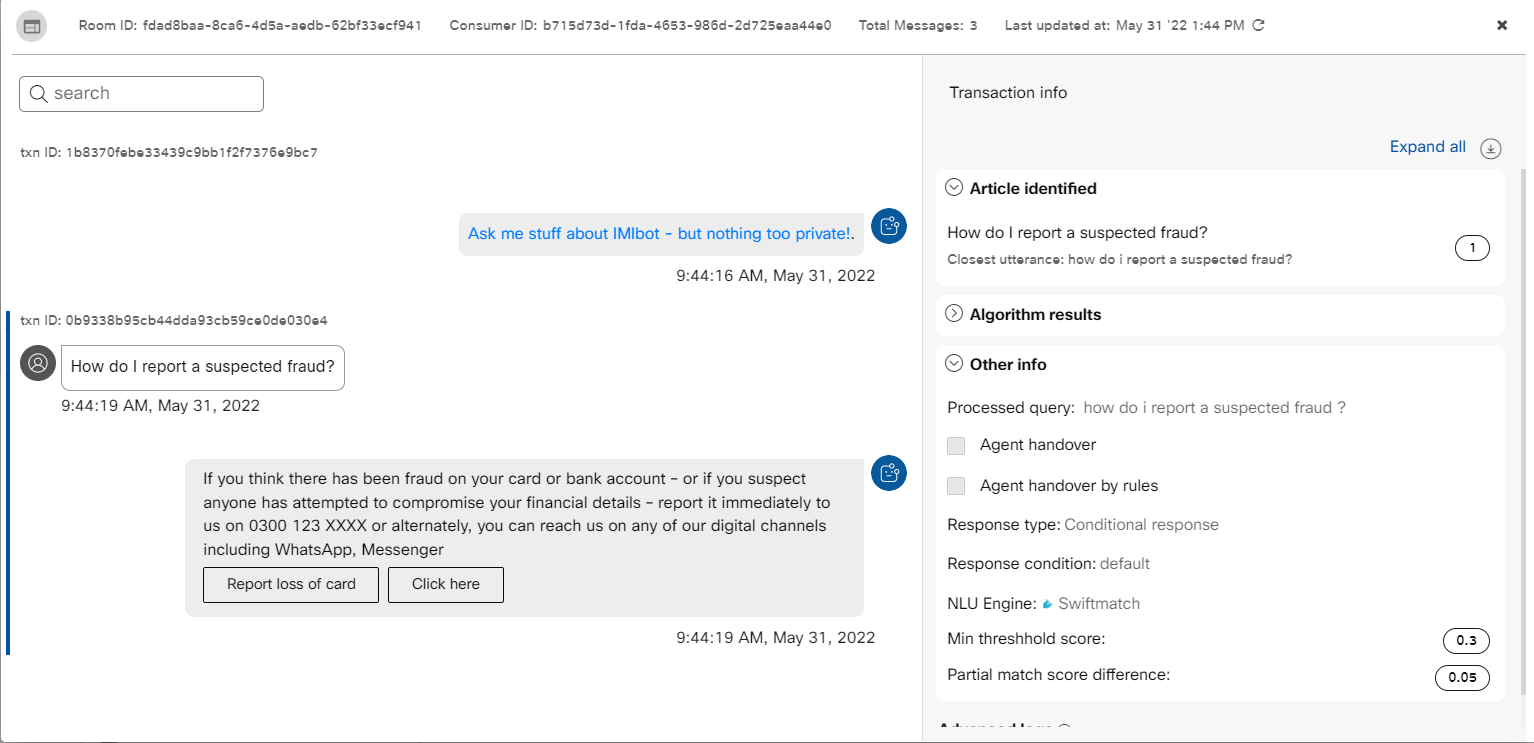

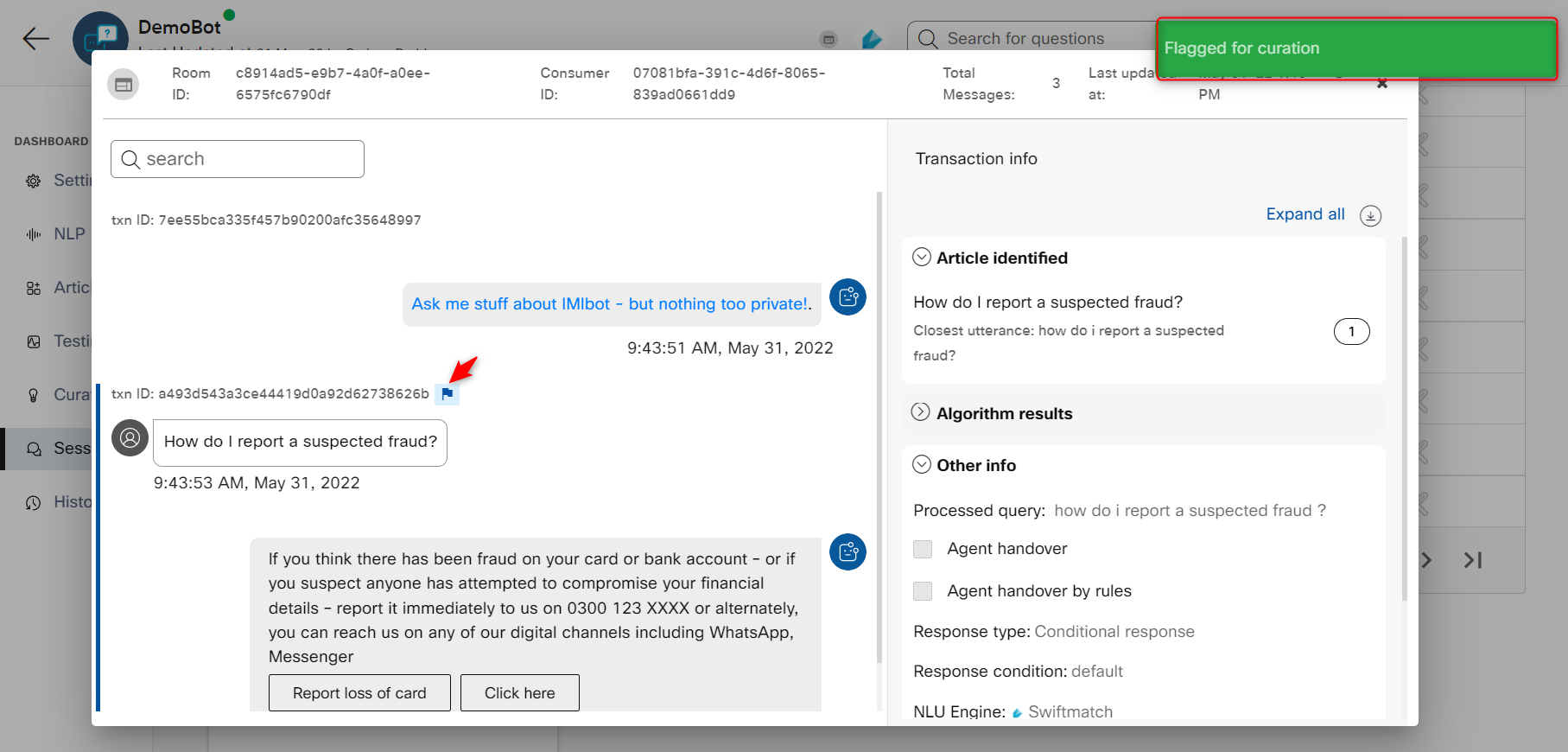

Session details of a particular session in the Q&A bot

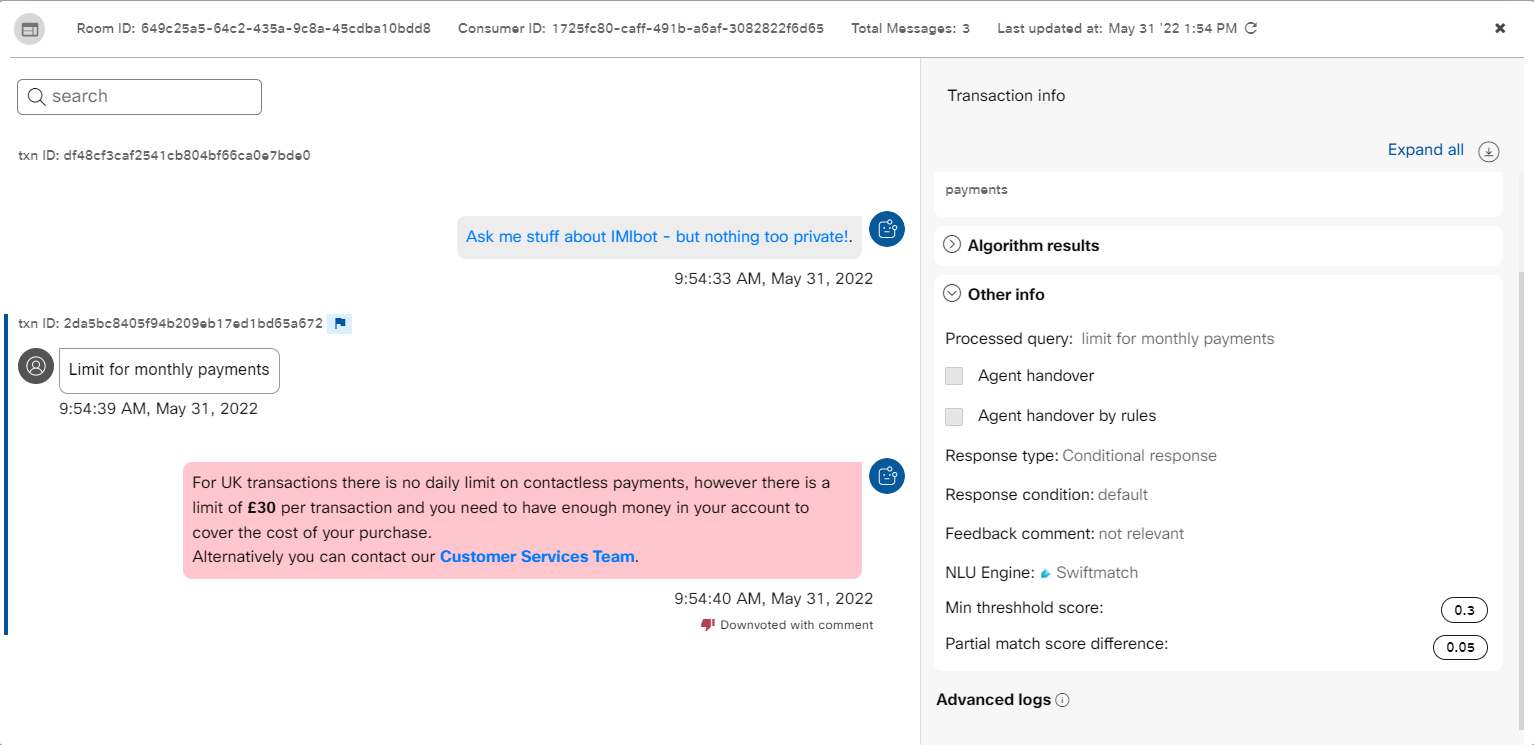

The session details open up as an overlay and show all the messages exchanged between the bot and the user for that particular session (or room).

Each transaction is a combination of user message and bot message. The Transaction Info tab provides the information in three sections for the Q&A bot:

- Articles identified section displays:

  - The exact match and partial match articles that are identified for a consumer query along with the similarity score.

- Algorithm results section displays:

  - The list of algorithm results along with the similarity score. The number of results to be displayed for each message depends on the value set on the Handover and Inference tab.

Transaction information of a specific bot response in the Q&A bot

- Other info section displays:

  - The processed query, which is the user input after preprocessing by the bot's pipeline.

  - The Agent handover check box. This is checked for transactions where an agent handover took place. If any of the rules set for agent handover are fulfilled, the Agent handover by rules check box is also selected.

  - The response type for the user query, which can be a code snippet or conditional response.

  - The response condition that is fulfilled for the consumer query. This can be a default response or a condition name linked to a conditional response.

  - The NLU Engine with which the bot corpus has been trained. The engines can be RASA, Switchmatch, or Mindmeld.

  - The minimum threshold score and partial match score difference configured in Handover and Inference settings.

  - The advanced logs, which list the debug logs associated with a specific transaction ID. Advanced logs are available in Sessions for up to 180 days.

Message displayed for users who do not have permission to decrypt the session

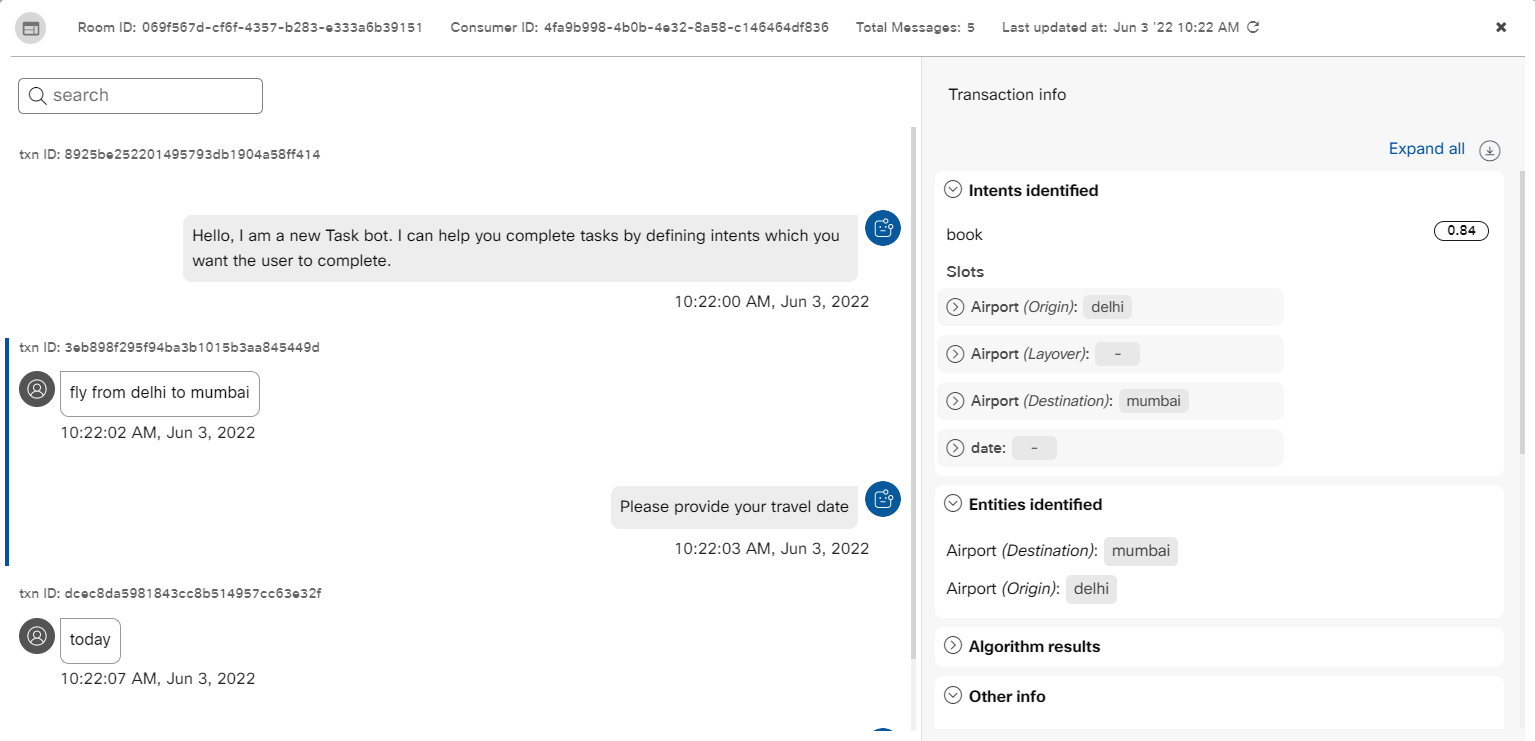

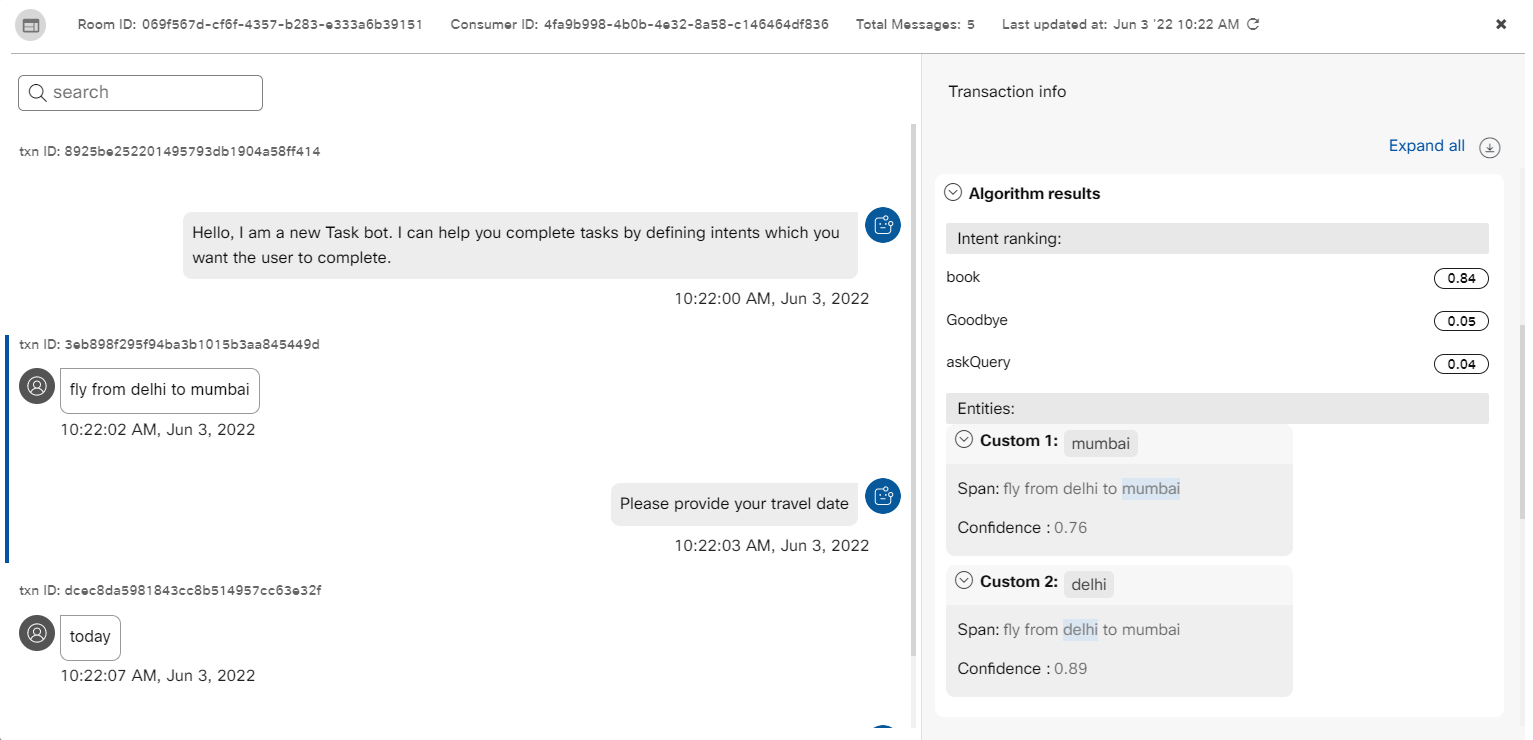

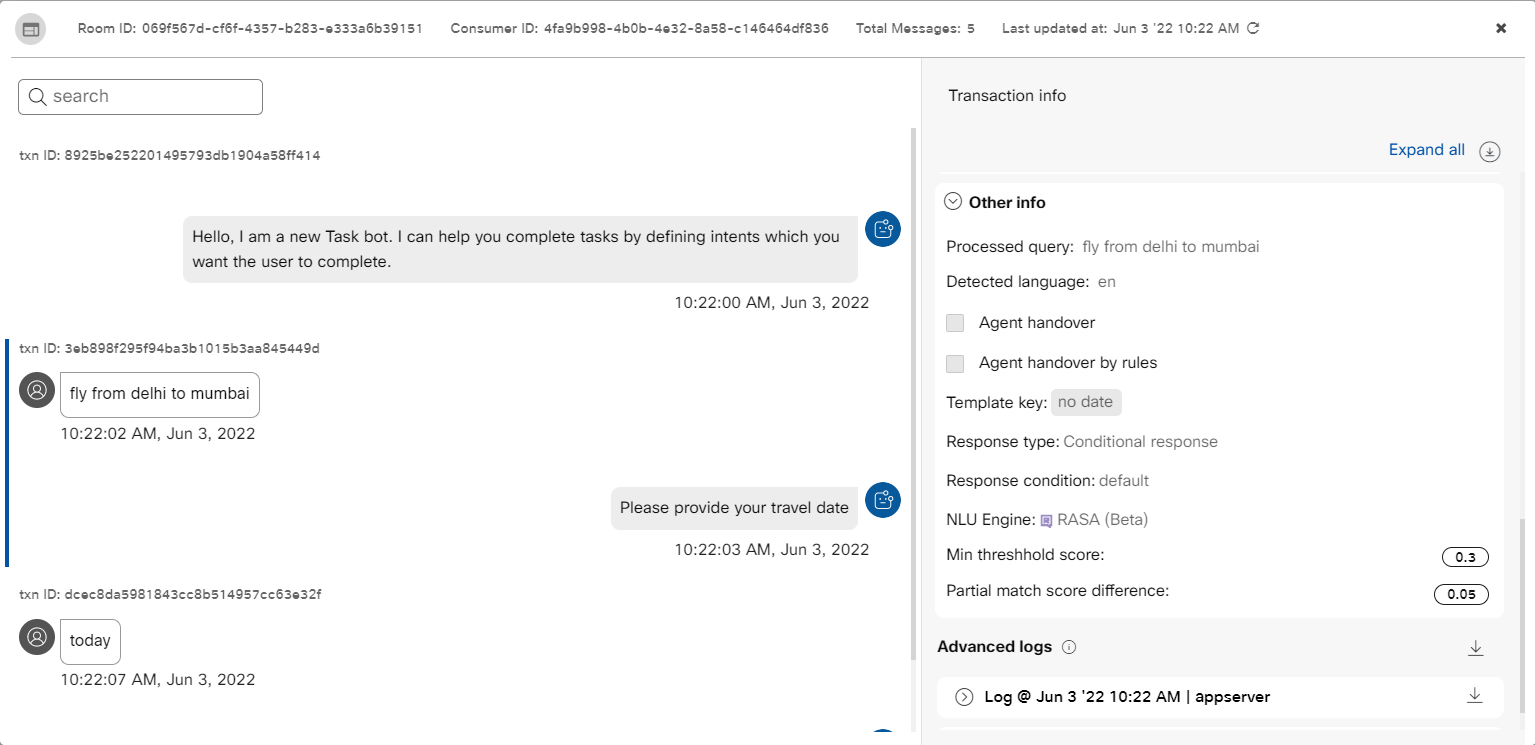

Session details of a particular session in the Task bot

The Transaction Info tab categorizes information into four sections for Task bots:

- Intents identified section displays:

  - The intents that are identified for the consumer query, with the similarity score.

  - The slot details that are linked to the identified intent based on the consumer query. Expand to view further details about each slot.

Intents identified for a consumer query

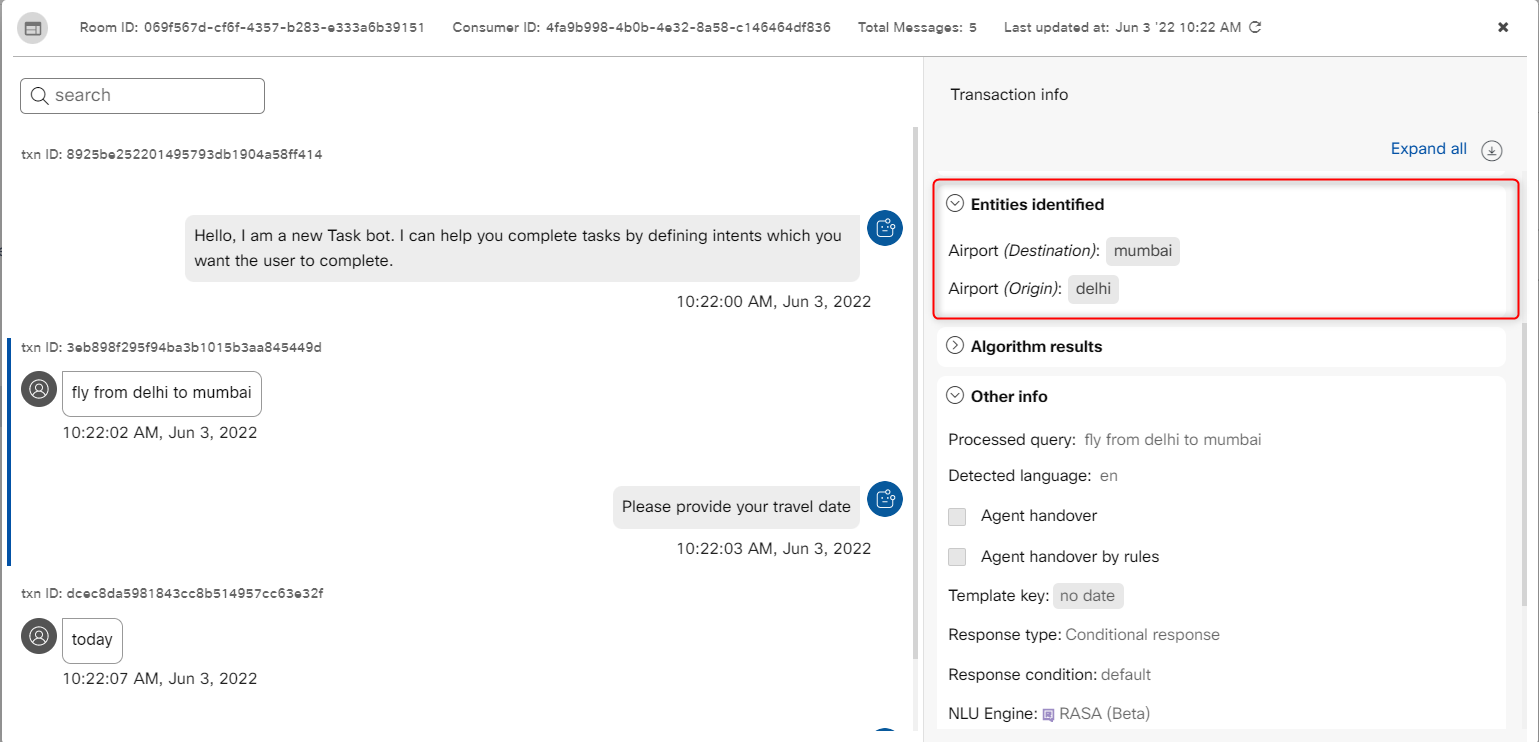

- Entities identified section displays:

  - The entities that are extracted from user messages and linked to the active consumer intent.

Entities identified for a consumer query

- Algorithm results section displays:

  - The list of algorithm results along with the similarity score for intents.

  - Various entities extracted from the user message.

Algorithm results

- Other info and metadata section displays:

  - The Agent handover check box. This is checked for transactions where an agent handover took place. If any of the rules set for agent handover are fulfilled, the Agent handover by rules check box is also selected.

  - The template key that is linked to an intent.

  - The response type for the user query, which can be a code snippet or conditional response.

  - The response condition that is fulfilled for the consumer query. This can be a default response or a condition name linked to a conditional response.

  - The NLU engine with which the bot corpus has been trained, which can be RASA, Switchmatch, or Mindmeld.

  - The minimum threshold score and partial match score difference configured in Handover and Inference settings.

  - The advanced logs, which list the debug logs associated with a specific transaction ID. Advanced logs are available in Sessions for up to 180 days. You cannot view the logs after this period.

Other info of a transaction

Note:

You can also download and view the transaction info in the JSON format using the download option.

There is another tab for NLP that contains metadata about the preprocessing of user input that took place using the bot's pipeline. There are FinalDF and Datastore tabs in smart bots that show those values corresponding to a particular transaction.

The session details screen comes with a Search bar. Bot users can also search within a conversation to quickly get to specific utterances if needed.

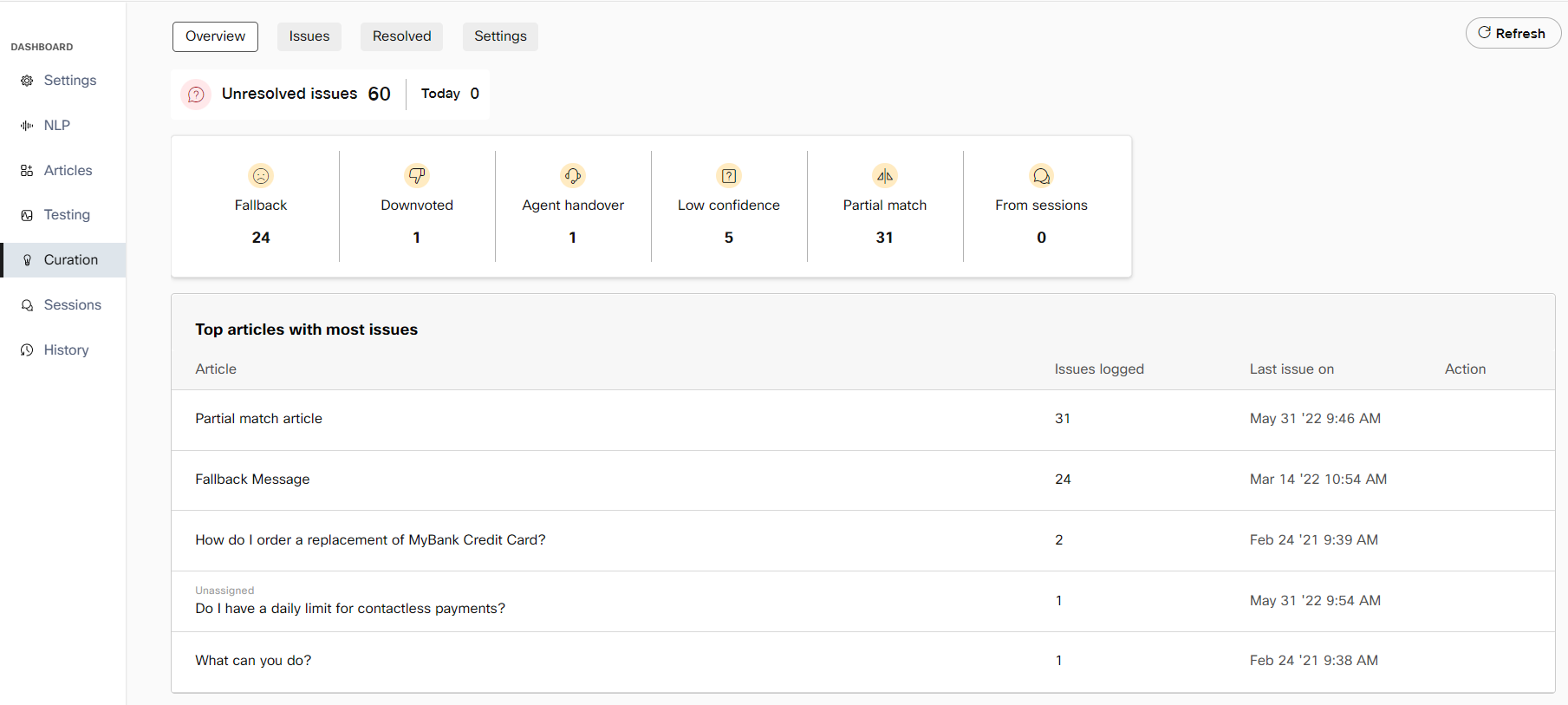

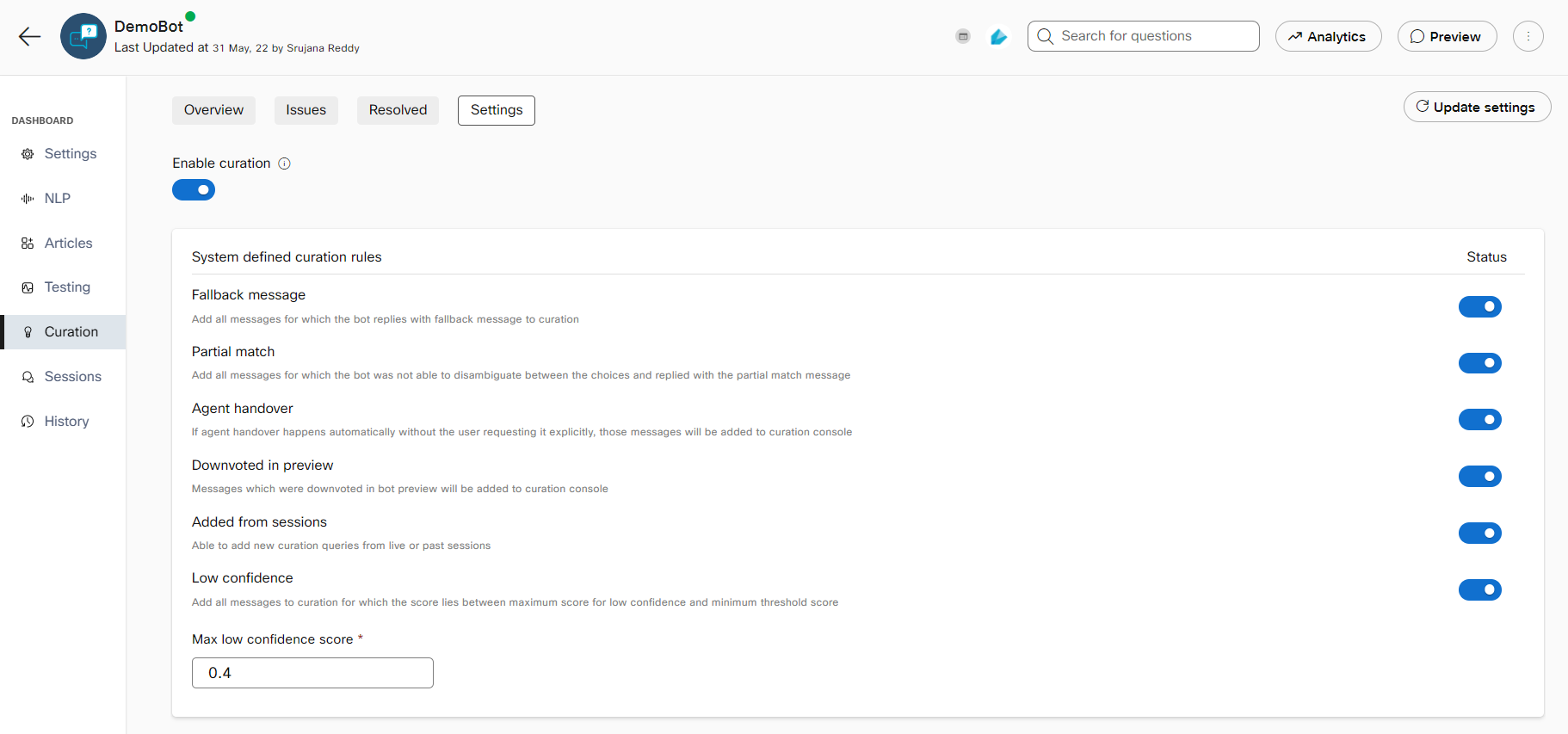

Curation

The Curation console helps users optimize their bot's performance over time through human-in-the-loop learning. It lets users review cases where the bot performed poorly. These cases can be curated to improve current articles/intents or to create new articles/intents. Incoming messages end up in Curation based on the rules and settings discussed here. Users can enable or disable these settings based on their requirements. The Curation console is available in both Q&A and task bots.

Curation settings in a Q&A bot.

Curation settings

Messages end up in Curation console based on the following rules:

- Fallback Message: The bot is not able to understand the user’s message. This happens when none of the articles clear the configured lowest threshold and the bot responds with fallback intent.

For task bots, there is an additional toggle for messages classified as 'default fallback intent'. Enabling this will send messages that trigger default fallback intent to the curation console.

- Downvoted: Messages which are downvoted in bot preview will be added to curation console.

- Agent handover: This rule is fired when agent handover occurs by the rules configured in the Q&A Bot.

- From Session: Users can flag incoming messages that did not get the desired response from the session or room data.

- Low Confidence: Adds to curation all messages whose score lies between the minimum threshold score and the maximum score for low confidence. The maximum score for low confidence can be configured.

- Partial Match: Adds all messages for which the bot was not able to disambiguate between the choices and replied with the partial match message.
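The score-based rules above can be illustrated with a small Python sketch. It rests on several assumptions that the platform may not share: scores range from 0 to 1, the low-confidence band includes both of its boundaries, and downvotes and partial matches are passed in as flags. The function name `curation_reasons` and its parameters are hypothetical.

```python
from typing import List


def curation_reasons(score: float, min_threshold: float,
                     low_confidence_max: float, *,
                     downvoted: bool = False,
                     partial_match: bool = False) -> List[str]:
    """Return the curation rules that a message would trigger in this sketch."""
    reasons = []
    if score < min_threshold:
        # No article cleared the threshold, so the bot responded with fallback.
        reasons.append("Fallback Message")
    elif score <= low_confidence_max:
        # The bot answered, but the score sits in the low-confidence band.
        reasons.append("Low Confidence")
    if partial_match:
        reasons.append("Partial Match")
    if downvoted:
        reasons.append("Downvoted")
    return reasons


print(curation_reasons(0.5, 0.6, 0.75))                  # ['Fallback Message']
print(curation_reasons(0.7, 0.6, 0.75))                  # ['Low Confidence']
print(curation_reasons(0.9, 0.6, 0.75))                  # []
print(curation_reasons(0.9, 0.6, 0.75, downvoted=True))  # ['Downvoted']
```

The Agent handover and From Session rules are omitted because they depend on handover configuration and manual flagging rather than on the score.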

Curation settings in a task bot

Resolving issues

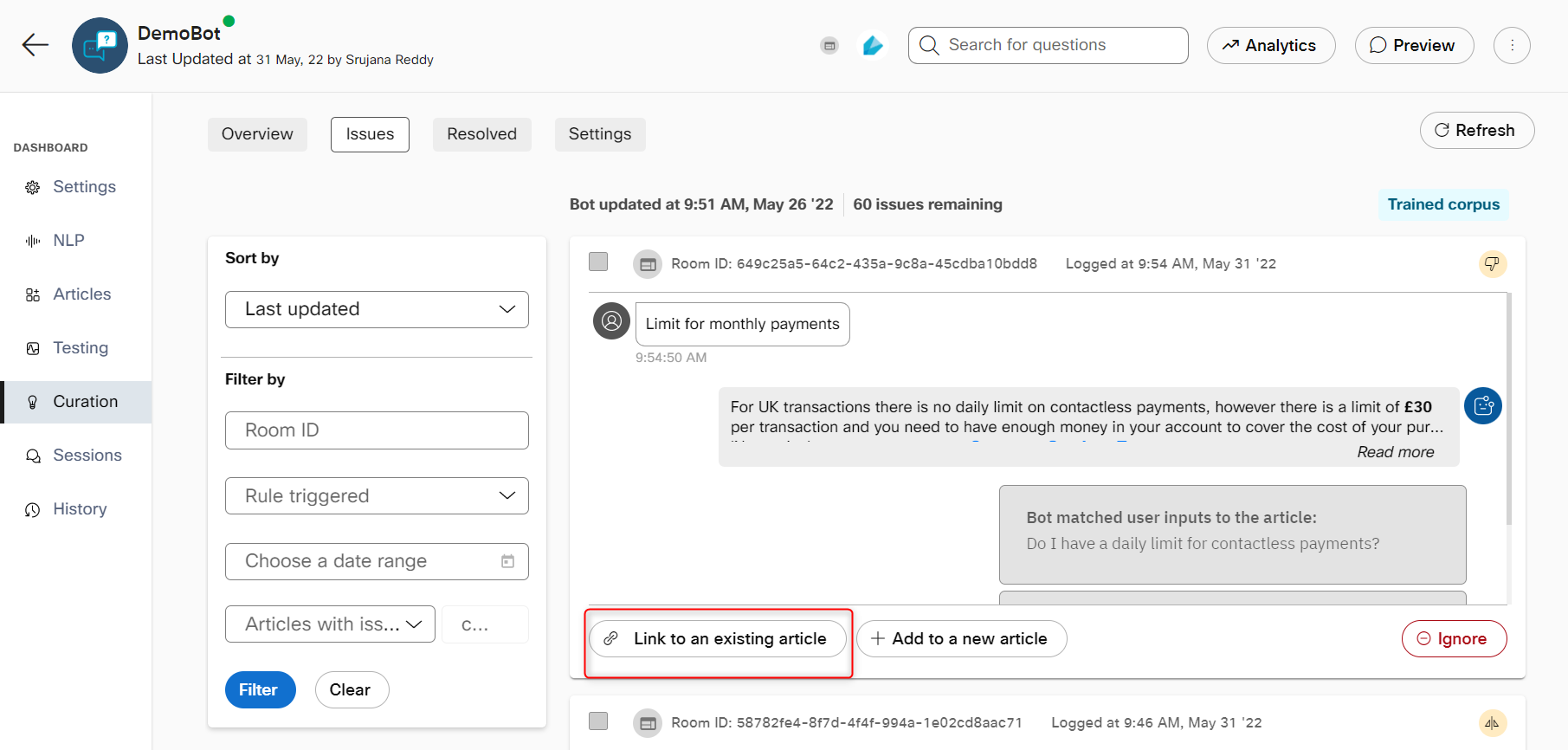

In the Issues tab in Curation console, users can choose to resolve or ignore utterances that ended up in the Curation console. Developers can view the user intents or utterances that ended up in curation as well as the bot response for that particular message. In addition to the text response, thumbnails of attachments such as images, videos, and audio files are also shown when present. Messages with attachments can be expanded to open the session view by clicking the thumbnails.

The permission to decrypt sessions and curations is granted at the user level. If the decrypt access toggle is enabled, the user can access any session using the Decrypt content button. However, this functionality is applicable only when the Advanced data protection is set to True or is enabled in the backend.

To resolve an issue, users can:

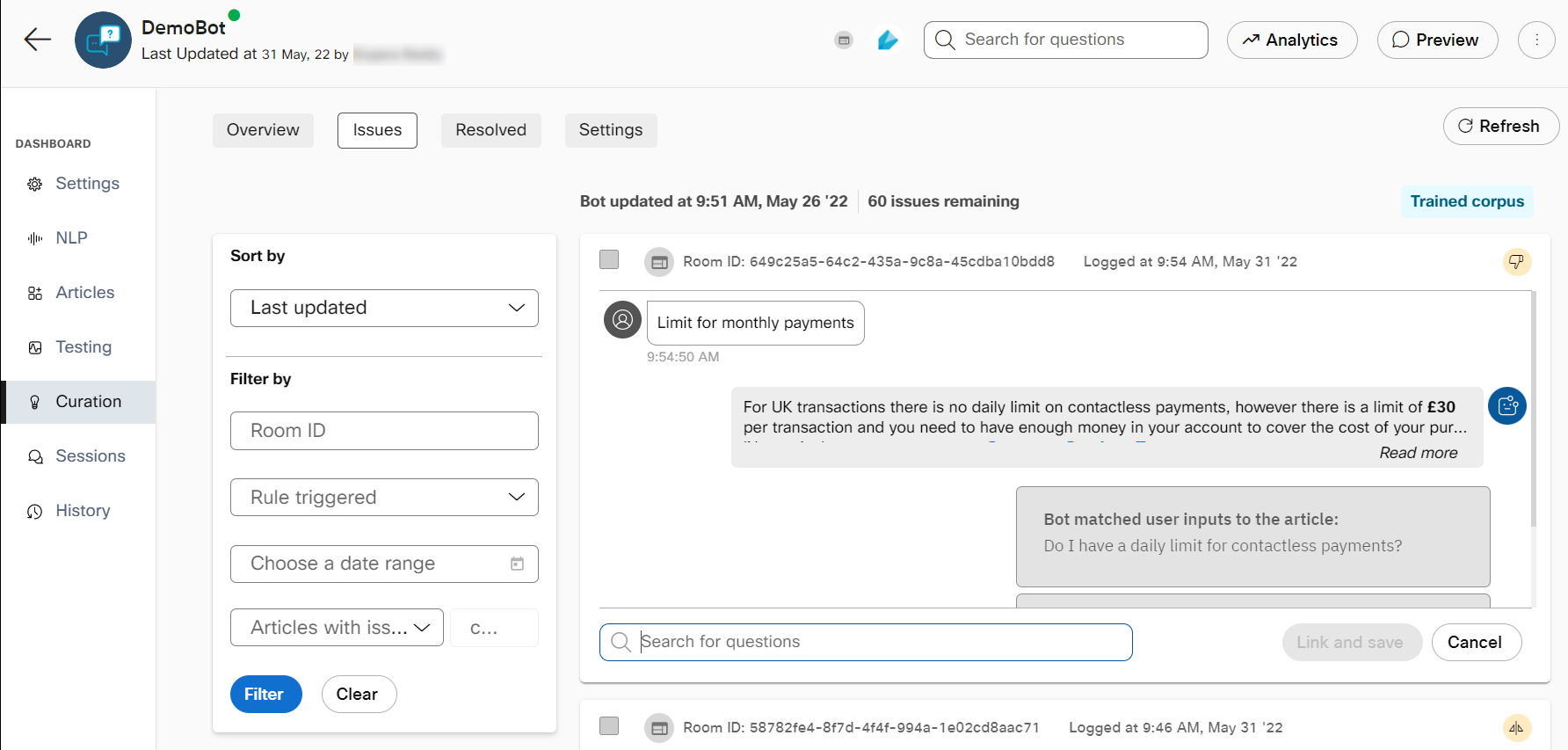

- Link to an existing article to add it to an existing article/intent, excluding the default articles such as welcome message, fallback message, and partial match. In Q&A bots, users can search for the article they want to link a particular issue to after selecting this option. Users must use the Link and save option to link the intent with the existing article. If a user tries to link utterances to any of the default articles, an error message is displayed. For task bots, users can select the intent from a dropdown and tag any entities if present.

- Add to a new article to create a new article/intent directly from the curation tab.

- Ignore query to not take any action for a particular issue and clear it from the Issues tab.

Once the issue is resolved, users need to re-train their bot. Users can also select multiple issues at once and resolve or ignore them together.

Curation issues in a Q&A bot

Search for the question to link the user intent that has ended up in curation

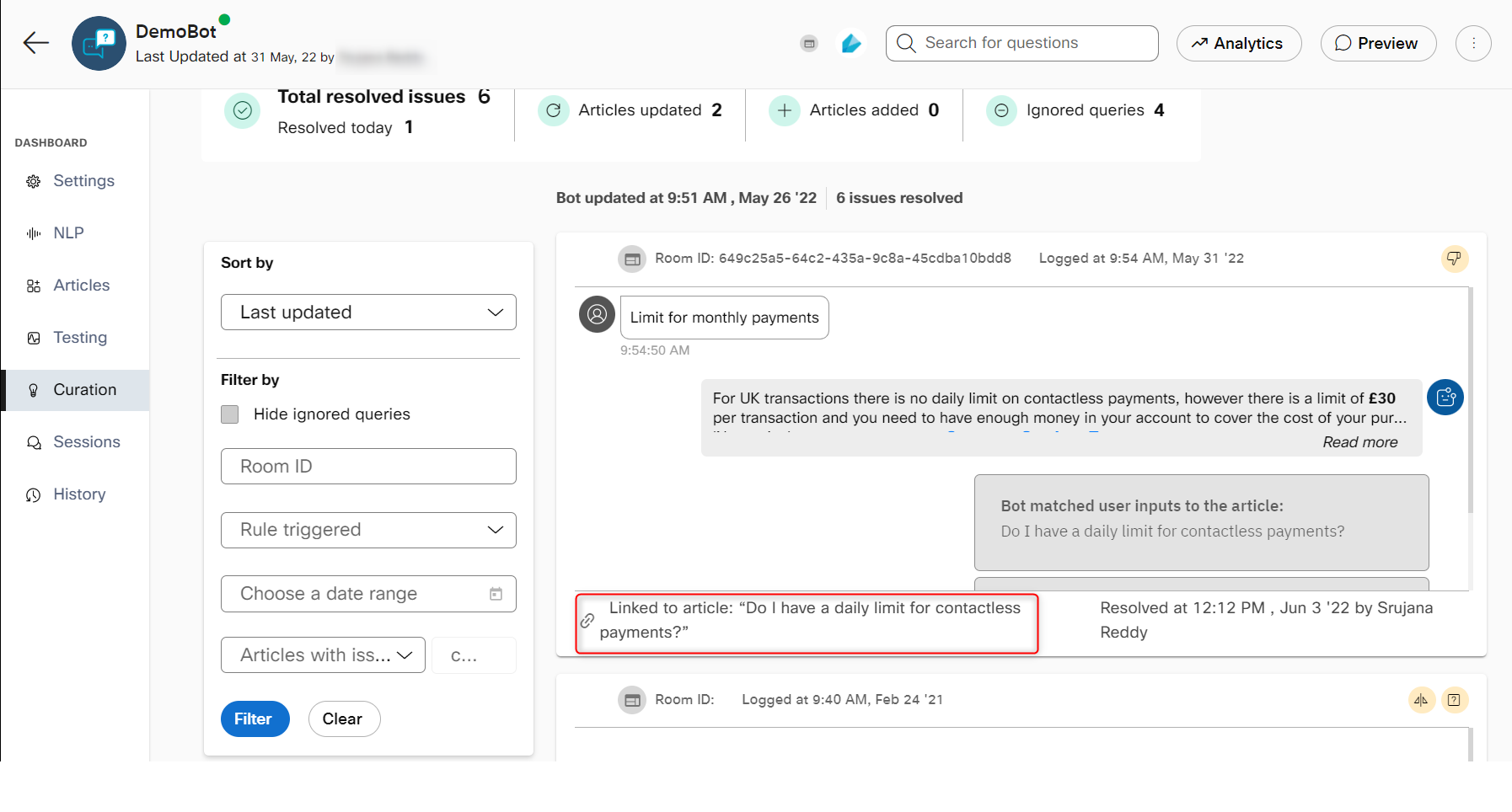

Users can view all the resolved issues under the Resolved tab. This screen consists of a summary of all the resolved issues and the type of resolution chosen. There is also a resolution method associated with each query that tells users whether an issue was added to an article/intent, used to create a new article/intent, or ignored.

Resolved issues in curation

Adding issues from sessions

If the bot gave an undesired response to some utterances but these were not captured by any of the rules, users can add specific utterances from the sessions to the curation console. Each transaction is associated with a Curation Status Flag, which can be toggled ON if the issue is not in the curation list. If the issue already exists in the curation list, the curation status flag changes colour to indicate this to users.

Curation flag for each transaction in sessions

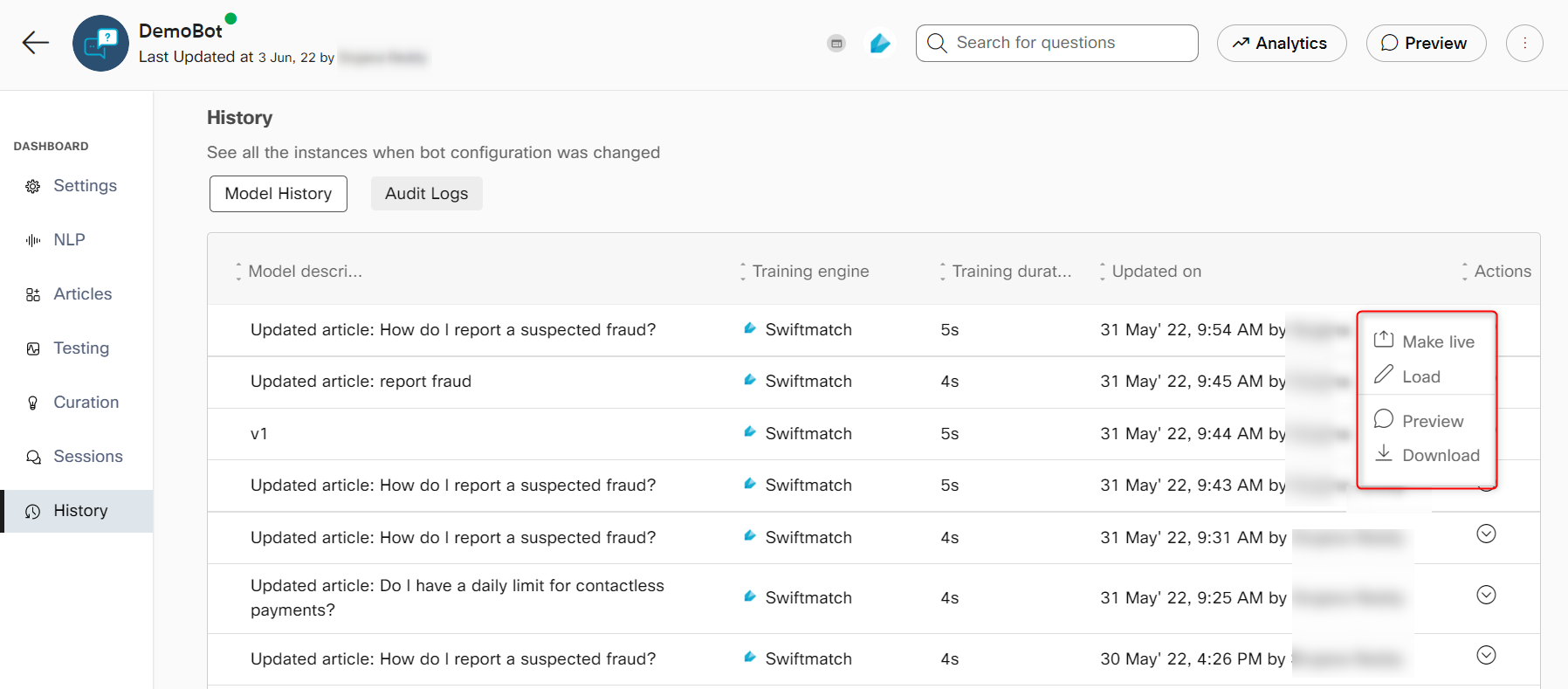

History

Q&A and task bots require training whenever an article, intent, or entity is added or updated. After every training, the bot’s performance needs to be checked. If the bot’s performance is not as expected, the History page lets you revert to an older training set without any hassle.

On the History page, you can:

- View when a corpus was trained

- View the changes made to the corpus that resulted in training

- View the training engine with which older iterations of the knowledge base or bot corpus were trained, along with the time taken to train each iteration. The training duration is calculated from the start and end times of training the corpus and is expressed in hours, minutes, or seconds.

- Track the changes made to the Settings, Articles, Responses, NLP and Curation sections of a bot

- Track the previously trained corpus that went live
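The training duration mentioned in the list above is derived from the start and end times of a training run. A minimal Python sketch of that calculation follows. The rule for choosing the unit (the largest unit that fits) and the output format are assumptions, and `training_duration` is a hypothetical helper rather than a platform function.

```python
from datetime import datetime


def training_duration(start: datetime, end: datetime) -> str:
    """Express the time between start and end in hours, minutes, or seconds."""
    seconds = int((end - start).total_seconds())
    if seconds >= 3600:
        return f"{seconds / 3600:.1f} hours"
    if seconds >= 60:
        return f"{seconds / 60:.1f} minutes"
    return f"{seconds} seconds"


start = datetime(2024, 1, 15, 10, 0, 0)
print(training_duration(start, datetime(2024, 1, 15, 10, 0, 45)))  # 45 seconds
print(training_duration(start, datetime(2024, 1, 15, 10, 2, 30)))  # 2.5 minutes
print(training_duration(start, datetime(2024, 1, 15, 12, 0, 0)))   # 2.0 hours
```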

Users can perform the following actions on the knowledge base right from the history section:

- Make Live: Users can change any of the past knowledge base statuses to Live.

- Edit: This feature enables a user to edit an article in the selected knowledge base. Editing an article creates a new clone of the existing knowledge base, which allows users to work on a previous knowledge base as well.

- Preview: This feature enables users to evaluate the bot’s performance for any knowledge base throughout the bot’s training History.

- Download: This feature enables users to download the articles in CSV format. The download option is only available for Q&A bots.

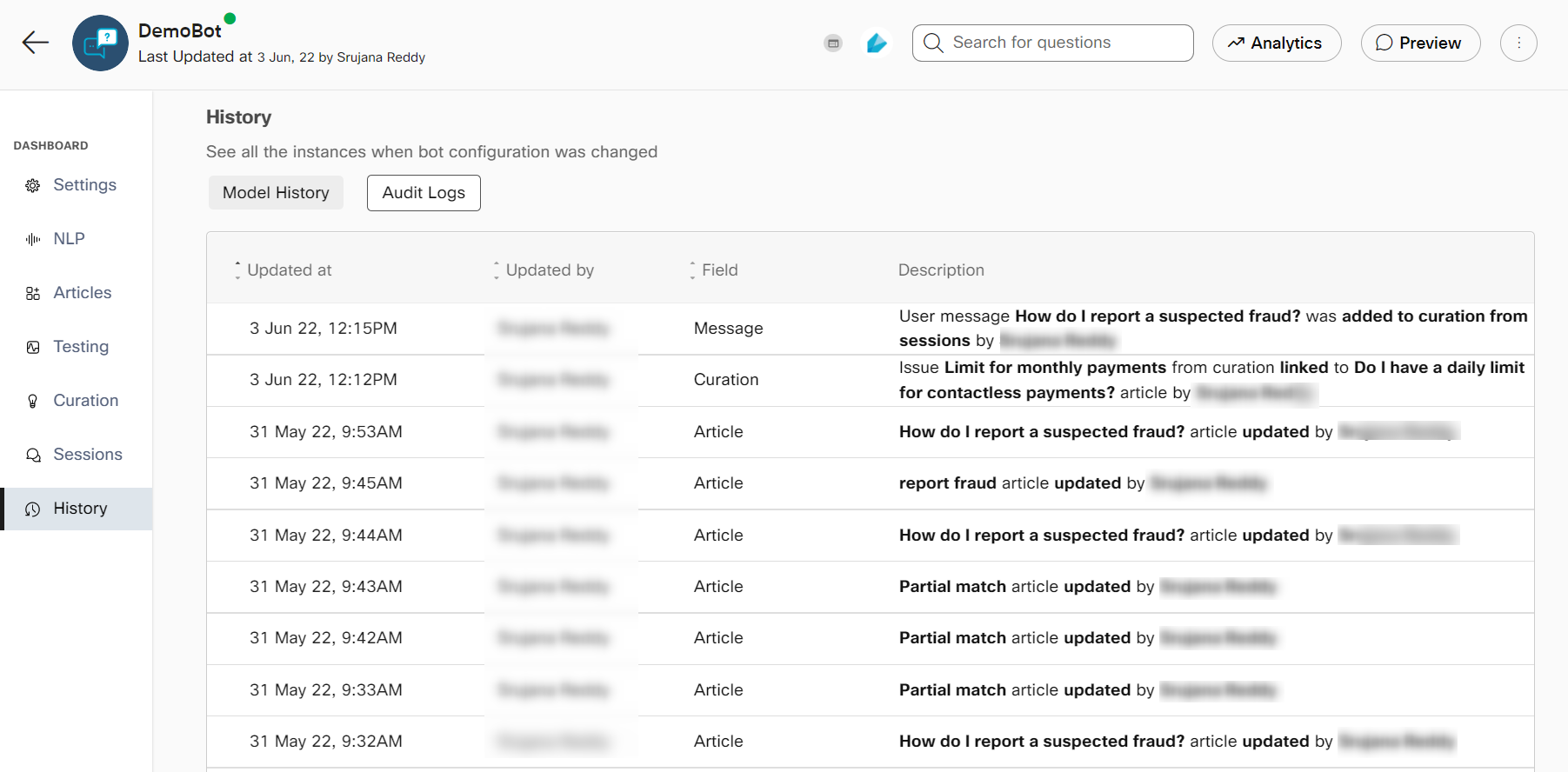

Viewing the Audit logs of Q&A and Task bots

This section tracks the changes made to the Settings, Reports, Articles, Responses, NLP and Curation sections of a specific Q&A or Task bot in the last 35 days. Users with Admin or Bot developer roles can access this section. Other users with custom roles that have the ‘Get Audit log’ permission can also view the audit logs.

- Select a specific Q&A bot on the Q&A bot tab of the Dashboard page.

Note: To review the audit logs of a task bot, click the Task bot tab on the Dashboard page.

- Click History. The History page appears.

- Click the Audit logs tab to review this information:

  - Updated at: The date and time at which the change was made.

  - Updated by: The user who made the change.

  - Field: The section of the bot where the change was made.

  - Description: Any additional information about the change.

Note

- You can search for a specific audit log using the Updated by and Field search options.

- You can only view 10 corpora of a bot on the Model History tab.
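The audit log view combines a 35-day window with a search on the Updated by and Field columns. The Python sketch below models that lookup over in-memory records. The record keys (`updated_at`, `updated_by`, `field`) mirror the column names above, but they are assumptions, and `search_audit_logs` is a hypothetical helper that uses exact matching.

```python
from datetime import datetime, timedelta

AUDIT_WINDOW_DAYS = 35  # retention window stated in the documentation


def search_audit_logs(logs, now, updated_by=None, field=None):
    """Return logs inside the audit window that match the optional search terms."""
    cutoff = now - timedelta(days=AUDIT_WINDOW_DAYS)
    return [
        log for log in logs
        if log["updated_at"] >= cutoff
        and (updated_by is None or log["updated_by"] == updated_by)
        and (field is None or log["field"] == field)
    ]


now = datetime(2024, 6, 30)
logs = [
    {"updated_at": datetime(2024, 6, 20), "updated_by": "alice", "field": "Articles"},
    {"updated_at": datetime(2024, 5, 1), "updated_by": "alice", "field": "Settings"},
    {"updated_at": datetime(2024, 6, 25), "updated_by": "bob", "field": "Settings"},
]
recent = search_audit_logs(logs, now)
by_alice = search_audit_logs(logs, now, updated_by="alice")
print(len(recent))                      # 2
print([l["field"] for l in by_alice])   # ['Articles']
```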

Updated about 2 years ago